Using SUS to Analyze and Report Feedback from Usability Testing

Linguamatics’ software solutions team was working on a new application. The team wanted a way to gather and present meaningful feedback from usability testing during development.

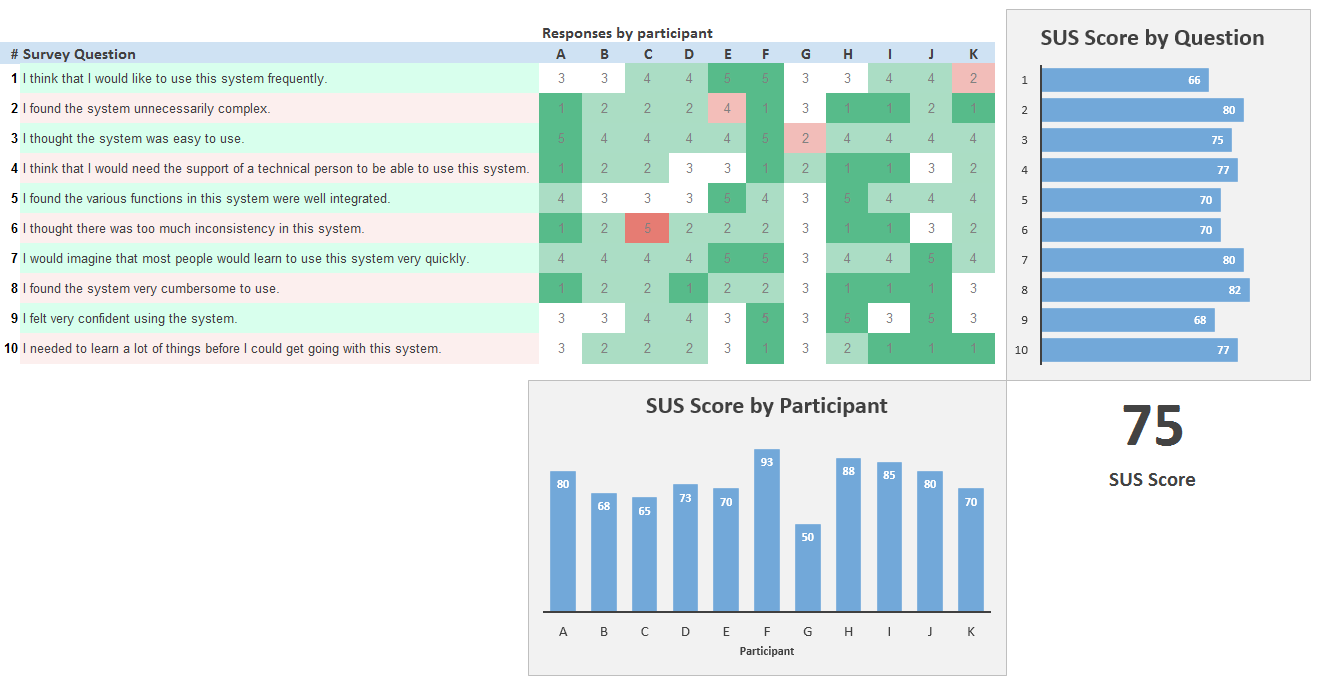

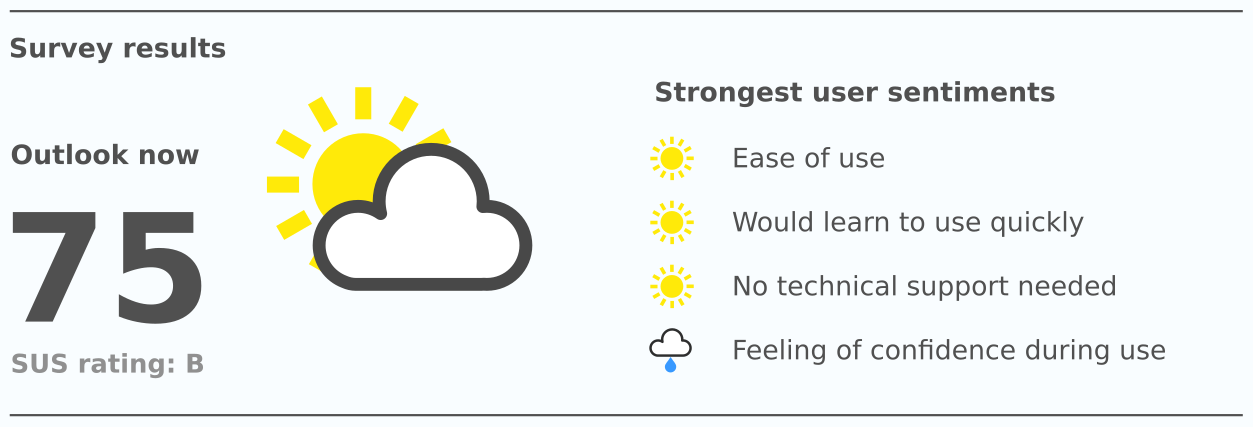

When presenting feedback to stakeholders, we wanted to show reliable, tangible usability metrics that could be compared across rounds of usability testing to demonstrate progress. We also wanted to show evidence of how the metrics were determined.

After researching a wide variety of usability and user experience metrics, we selected the System Usability Scale (SUS) as our preferred method. SUS is a long-standing, reliable, and flexible method. With 10 questions, it provides a good level of detail without overwhelming participants.
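
For readers unfamiliar with how the 10 answers become a single number, here is a minimal sketch of the standard SUS scoring rule. It is not the team's own tooling. It assumes the usual questionnaire layout: each item is answered on a 1 to 5 scale, odd-numbered items are positively worded, and even-numbered items are negatively worded. The function names and the sample responses are hypothetical.

```python
def sus_score(responses):
    """Return the SUS score (0-100) for one participant.

    `responses` is a list of 10 integers from 1 to 5, in questionnaire order.
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")

    total = 0
    for item_number, response in enumerate(responses, start=1):
        if item_number % 2 == 1:
            # Odd items are positively worded: contribution is response - 1.
            total += response - 1
        else:
            # Even items are negatively worded: contribution is 5 - response.
            total += 5 - response

    # The summed contributions (0-40) are scaled to a 0-100 range.
    return total * 2.5


def mean_sus_score(all_responses):
    """Average the SUS scores of several participants in one testing round."""
    scores = [sus_score(r) for r in all_responses]
    return sum(scores) / len(scores)


# Hypothetical round of testing with two participants (made-up data).
example_round = [
    [5, 1, 5, 1, 5, 1, 5, 1, 5, 1],
    [3, 3, 3, 3, 3, 3, 3, 3, 3, 3],
]
round_average = mean_sus_score(example_round)
```

Computing one average per round in this way gives a single figure that can be compared between rounds, while the per-participant scores remain available as evidence of how that figure was reached.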