Process

We designed the Open Targets Platform following a collaborative and iterative design process (Karamanis et al. 2018). Qualitative feedback from users has been at the heart of this process. In addition to helping us understand what needs to be improved, this feedback helps us assess whether we have met our main objective.

By the time the platform was made publicly available, we had collected extensive feedback from users indicating that it was comprehensive and intuitive. Users also shared cases in which the platform helped them with their day-to-day activities in drug target identification (see Karamanis et al. 2018 Supplementary Table 1).

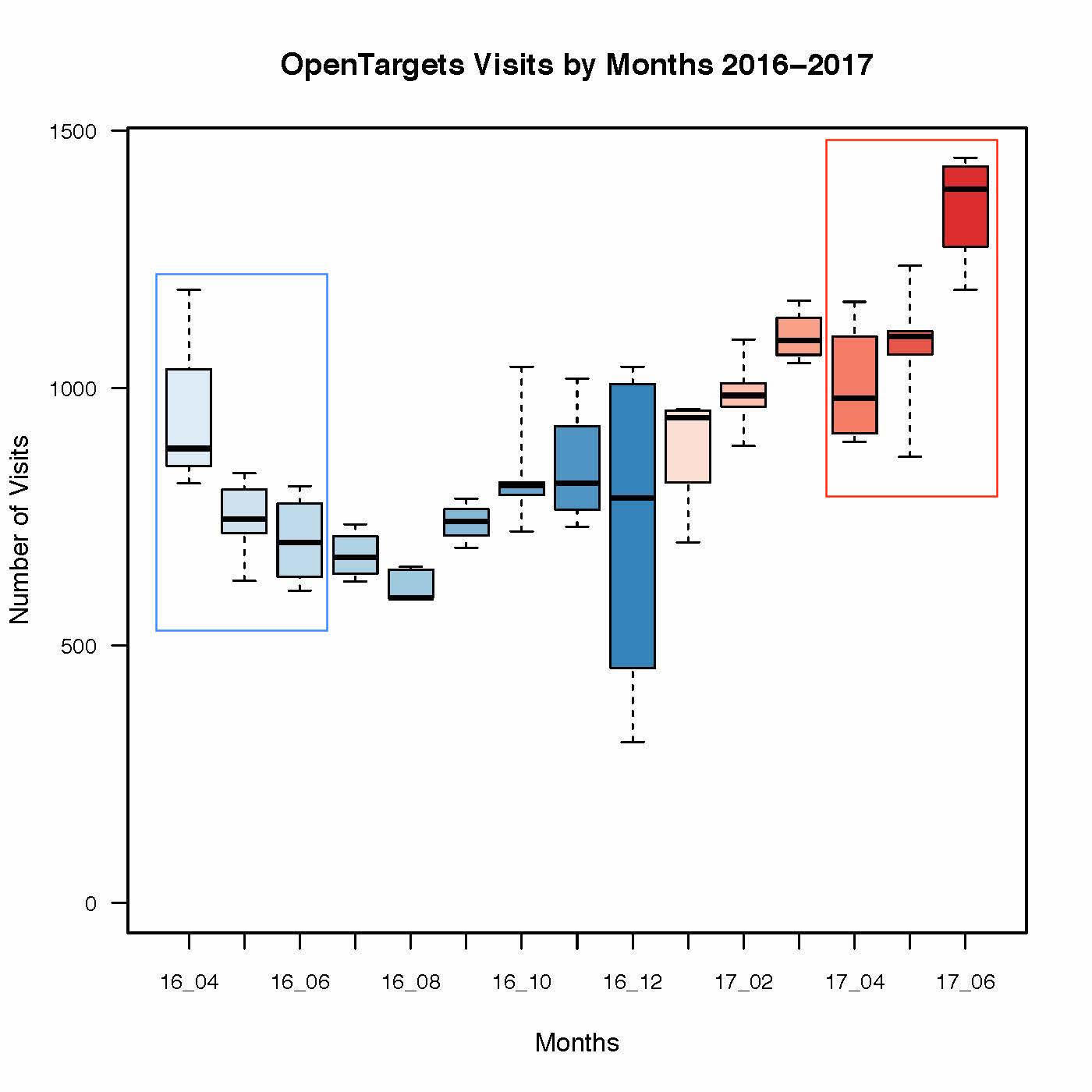

We supplemented the qualitative feedback from users with quantitative metrics. Brainstorming a long list of metrics can quickly get unwieldy and difficult to prioritize. In order to avoid this problem, we identified key performance indicators using the HEART methodology. This methodology breaks down the experience of using a product into five aspects: Happiness, Engagement, Adoption, Retention and Task completion (Rodden et al. 2010).

The importance of each aspect of the HEART methodology varies from product to product. For the Open Targets Platform, we decided to focus on Adoption, Engagement and Retention (in that order) for the definition of quantitative metrics because these aspects can be captured regularly and more directly through web analytics. Although we use qualitative feedback (see Karamanis et al. 2018 Supplementary Table 1) as the main indicator of Happiness, we intend to start surveying our users periodically in order to monitor differences in the Net Promoter Score (Reichheld 2003) between major updates of the Platform. Task completion is less relevant to our application since using the platform is much more open-ended than, for instance, making a purchase online (which has a clear completion action).
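As a point of reference, the Net Promoter Score is conventionally computed from answers to a single 0 to 10 "how likely are you to recommend" question: the percentage of promoters (ratings of 9 or 10) minus the percentage of detractors (ratings of 0 to 6). The sketch below illustrates that standard calculation only; the function name and the sample ratings are hypothetical and do not describe any survey actually run for the Platform.

```python
def net_promoter_score(ratings):
    """Return the Net Promoter Score (from -100 to 100) for a list of 0-10 ratings.

    Hypothetical helper for illustration: promoters rate 9-10, detractors 0-6,
    and passives (7-8) count only towards the total number of responses.
    """
    if not ratings:
        raise ValueError("at least one rating is required")
    if any(r < 0 or r > 10 for r in ratings):
        raise ValueError("ratings must be between 0 and 10")

    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return 100.0 * (promoters - detractors) / len(ratings)


# Made-up ratings from two hypothetical survey rounds, one per major update.
before_update = [10, 9, 8, 7, 6, 3]
after_update = [10, 10, 9, 8]
difference = net_promoter_score(after_update) - net_promoter_score(before_update)
```

Comparing the score between survey rounds, rather than reading a single value in isolation, matches the stated aim of monitoring differences between major updates.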

We defined high-level goals and lower-level signals for the prioritized aspects, as well as actual metrics for each aspect. The members of our multidisciplinary team were invited to contribute to these definitions, much as they participated in our collaborative user research, design and testing activities. This process helped us clarify the purpose of collecting analytics before investing effort in the actual way in which this will be done. We review the statements of goals, signals and metrics periodically; the most recent version is shown in Table 1.

| Aspect | Goals | Signals | Key Metrics |
| --- | --- | --- | --- |
| Adoption | We want people to be visiting the site and viewing its pages. | Visiting site, Viewing pages | Visits per week, Unique visitors per week, Pageviews per week, Unique pageviews per week |
| Engagement | We want people to be using the site and performing certain actions. | Spending time on site, Searching the site, Downloading information, Clicking on evidence links | Average visit duration, Bounce rate per week, Actions per week, Actions per visit |
| Retention | We want people to come back to the site after their first visit. | Returning to the site | Returning visits, % returning visits / all visits |

Table 1: Following the HEART methodology, we prioritized Adoption, Engagement and Retention as the aspects of user experience to focus on for the definition of quantitative metrics. We collaboratively defined high-level goals for each of the prioritized aspects and then identified lower-level signals as well as actual metrics for each aspect.
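To make the key metrics in Table 1 more concrete, here is a minimal sketch of how several of them could be derived from one week of visit records. Everything in it is an assumption made for illustration: the `Visit` record, its field names, the `weekly_metrics` function and the definition of a bounce as a visit with at most one pageview. In practice a web analytics tool reports these figures directly, and its definitions may differ.

```python
from dataclasses import dataclass


@dataclass
class Visit:
    """Hypothetical record of a single visit within one week."""
    visitor_id: str
    duration_seconds: float
    pageviews: int
    actions: int          # e.g. searches, downloads, clicks on evidence links
    is_returning: bool    # True if this visitor had visited before


def weekly_metrics(visits):
    """Compute a subset of the Table 1 metrics for one week of visits."""
    total_visits = len(visits)
    if total_visits == 0:
        raise ValueError("at least one visit is required")

    # Assumption: a bounce is a visit that viewed at most one page.
    bounces = sum(1 for v in visits if v.pageviews <= 1)
    returning = sum(1 for v in visits if v.is_returning)
    total_actions = sum(v.actions for v in visits)

    return {
        # Adoption
        "visits": total_visits,
        "unique_visitors": len({v.visitor_id for v in visits}),
        "pageviews": sum(v.pageviews for v in visits),
        # Engagement
        "average_visit_duration": sum(v.duration_seconds for v in visits) / total_visits,
        "bounce_rate": bounces / total_visits,
        "actions": total_actions,
        "actions_per_visit": total_actions / total_visits,
        # Retention
        "returning_visits": returning,
        "pct_returning_visits": 100.0 * returning / total_visits,
    }


# Made-up sample data for one week.
sample_week = [
    Visit("a", 120, 3, 5, False),
    Visit("a", 60, 1, 1, True),
    Visit("b", 30, 1, 0, False),
    Visit("c", 90, 2, 2, True),
]
metrics = weekly_metrics(sample_week)
```

Grouping the output by aspect mirrors the structure of the table, which keeps each number tied to the goal it is meant to inform.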